First experiences with PBG to PBR

From the time it was first announced, I really liked the idea of converting a PBG (partition by growth) tablespace to PBR (partition by range). This is one of those features that instantly gets our attention and is a future “hey, I want to do that once I am able to do so!” According to the manuals, this feature is available at FL500 of v13. So, it’s an immediate win once you achieve migration and ability to use new features. It was one of the top features we were most anticipating.

Even before the announcement, I think many of us have encountered tablespaces that in hindsight should have been created as PBR to begin with. Some of us will even go further and admit to creating those PBGs.

I inherited one of these and noticed it was getting larger according to our storage report. It’s been on my list and once we achieved v13 I wanted to give it a try. I let a coworker experiment with it first before I gave it a try. Once I saw the advancement, he had made I knew it was my turn.

Admittedly, I don’t know why I waited! It was an easy process with just a simple alter and reorg (and rebind).

The table I was working on was member (customer) facing. It held important information and was quite large at 1.6 billion rows. It had grown to 3 partitions out of the 5 maxpartitions we had set. These were 64 gig partitions so were healthy size. The funny part is that it’s not even reached it’s 5-year retention period. So, data will continue to grow. We have already made extension on MAXPARTITIONS, so we certainly don’t want to do that again.

Below I will detail the steps I took to make this change. I have done so in lower environment and have scheduled the production implementation in the future.

-

Determine partitioning key.

This might be an obvious choice yet sometimes it isn’t. Or maybe you know the appropriate key but aren’t quite sure of the correct values. You have two choices here.

- Run various queries and determine the values you want to use.

- Fully test it by creating a copy in lower environment. You can load a similar table (created as PBR) with an unload from image copy to ensure you are working with good data. Then do a reorg rebalance. This will ensure you are getting good values to use plus verifying the data will fit in the partitions you have selected. I chose to do this option. The reorg rebalance did a perfect job of spreading the data. I wanted best confirmation plus I wanted to work ahead while we were waiting to migrate to v13.

-

Alter table.

ALTER TABLE schema.table_name

ALTER PARTITIONING TO

PARTITION BY RANGE (partitioning_column)

(PARTITION 1 ending at (‘1’)

, PARTITION 2 ending at (‘2’)

, PARTITION 2 ending at (‘3’)

, PARTITION 2 ending at (‘4’)

, PARTITION 2 ending at (MAXVALUE));

What surprised me most is that even if I knew the highest value that the column could hold, I still had to define the last partition as MAXVALUE. This ensures that there is a place for all data to reside. It seems obvious enough yet that caught me off guard when I received the -650 reason 55. The documentation is quite clear, yet I didn’t catch this requirement.

-

Reorg

This is where you apply the pending changes via reorg.

-

Rebind (if applicable)

This change will invalidate your packages so take note of all dependent packages and rebind accordingly.

REBIND PACKAGE(collection.sp_package.(sp_version01)) APRETAINDUP(NO)

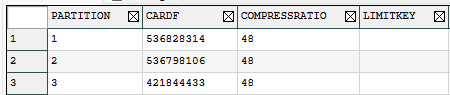

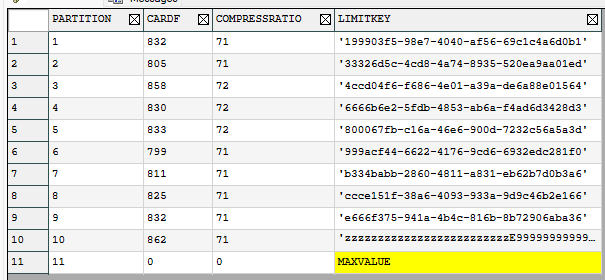

Before:

After:

Query:

SELECT TP.PARTITION

, BIGINT(TP.CARDF) AS CARDF

, TP.COMPRESSRATIO

, TP.LIMITKEY

FROM SYSIBM.SYSTABLEPART AS TP

WHERE DBNAME = 'dbname'

AND TSNAME = 'tsname'

Overall, this feature is fantastic with little effort or risk. Due to the use of the table in question, I will hide my change in production behind a planned maintenance outage later this year.

I’ll caution your enthusiasm with a word of caution. Please check with your Db2 Systems Programmer to ensure you have PH54836 installed. This did cause me some issues in production. You’ll want to delay your implementation until you have confirmed this important fix is installed.

This year at IDUG in Charlotte, I heard the possibility that Db2 would implement a feature to go from PBR to PBG. It took me a moment to consider why that would ever be considered. It seems that PBR is far superior with larger tables. The answer was simple yet not obvious. There could be tables that are partitioned but you don’t like the partitioning key. So, if the feature was available, you could convert back to PBG then choose a different partitioning key and then convert to PBR. Genius. I think it will be a rarely used feature but if you’re stuck in that situation, it would be nice to have an easy way out.

I hope you take advantage of this new feature. I am confident you have PBGs that would be better off if they were designed as PBRs.

As always, if you have any feedback, let me know!

References:

- Converting tables from growth-based to range-based partitions

- Changes that invalidate packages

(keep this link handy for all sort of changes you plan to make!) - ALTER Table statement

- Syntax and options of the REORG TABLESPACE control statement

- PH54836