pureScale with Pacemaker - Chapter 1: The Big Picture

by Justina Srebrnjak

It’s finally here! The news you’ve all been waiting for... Db2 support of Pacemaker with pureScale is now available for Linux operating systems in Db2 12.1.0 and beyond! In this new version, Pacemaker replaces Tivoli System Automation for Multiplatforms (a.k.a. TSA) as the integrated cluster manager for pureScale deployments. Since IBM started this journey of transitioning from TSA to Pacemaker for all integrated HA solutions on Linux environments, we've been talking about the benefits of Pacemaker: strong support for cloud environments, improved performance, simpler problem determination, and a simpler cluster software architecture, among others. We have covered these benefits extensively throughout ‘The Book of Db2 Pacemaker’ series, so you can go back to the first in the series, ‘The Book of Db2 Pacemaker – Chapter 1: Red pill or Blue pill?’, to recall the whole discussion.

In this blog, we will be diving into the inner workings of the pureScale resource model with Pacemaker! This includes explanations of the components that make up the resource model and how they interact together to ensure the cluster stays available and healthy. Let’s get started!

What is a resource model?

Before we get into the specifics of pureScale, it is important to understand what I’m talking about when I say “an HA resource model”. An explanation of a Db2 HA resource model, common to all HA solutions, was previously presented in The Book of Db2 Pacemaker – Chapter 3: Pacemaker Resource Model: Into the Pacemaker-Verse. As a refresher, I will reiterate the key details here.

A Db2 HA resource model is a plan to ensure the HA setup (i.e., the pureScale configuration in this case) stays up and running. For pureScale, this is a fundamental piece of the data-sharing architecture, as it ensures that recovery is completed quickly and automatically to maintain the availability of the cluster. To do this, components of the setup, called resources, are monitored by the cluster manager. When unusual activity is observed for a resource, the resource model describes the actions to be taken to keep the cluster healthy. For example, if a software or hardware failure is observed on a host and the Db2 member fails, the cluster manager will attempt to restart the member on the same host or fail over to a different host, following the recovery procedures outlined in the resource model.

Let’s break down all the ingredients in a Db2 HA resource model with Pacemaker:

- Resources – these are the components that need to be monitored to ensure the health of the cluster.

- Resource agents – these are open-source UNIX shell scripts modified and maintained by Db2 (users should NOT tamper with them, or they risk voiding their support contract). These scripts are responsible for monitoring the resources and for taking actions to recover from specific failures (e.g., starting and stopping the resource). It is important to highlight that for all Db2 integrated Pacemaker solutions, only the Db2-supplied versions of the resource agents can be used. The vanilla open-source versions and any other third-party ones are not supported as part of the integrated solution.

- Constraints – these are relationships among the resources, defined by Db2. They have a profound impact on recovery behavior. Later in this blog, we will look at a few example constraints; a more detailed discussion of the role they play is left for a future blog post, where we will walk through examples of failure scenarios and their recovery paths.
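Whatever resource it manages, every agent answers the same small set of actions from Pacemaker (such as start, stop, and monitor) and reports the outcome through the exit codes defined by the OCF resource agent API. The minimal sketch below illustrates that contract only. It is written in Python rather than in shell like the real Db2-supplied agents, and `ToyResource` and `run_action` are hypothetical names that stand in for a managed process; this is not how db2cf or db2member are implemented.

```python
# Standard OCF exit codes (from the OCF resource agent API).
OCF_SUCCESS = 0
OCF_ERR_UNIMPLEMENTED = 3
OCF_NOT_RUNNING = 7


class ToyResource:
    """Hypothetical stand-in for a managed process; not a real Db2 component."""

    def __init__(self) -> None:
        self.running = False


def run_action(action: str, res: ToyResource) -> int:
    """Dispatch one agent action and return the OCF exit code Pacemaker expects."""
    if action == "start":
        res.running = True
        return OCF_SUCCESS
    if action == "stop":
        # Stopping an already-stopped resource must still report success.
        res.running = False
        return OCF_SUCCESS
    if action == "monitor":
        # 0 means "running and healthy", 7 means "cleanly stopped".
        return OCF_SUCCESS if res.running else OCF_NOT_RUNNING
    # Any action this toy agent does not know about.
    return OCF_ERR_UNIMPLEMENTED


if __name__ == "__main__":
    r = ToyResource()
    for act in ["monitor", "start", "monitor", "stop", "monitor"]:
        print(act, run_action(act, r))
```

The distinction between "stopped" (7) and a genuine error matters: it is what lets the cluster manager tell a resource that is simply not running apart from one that has failed and needs recovery.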

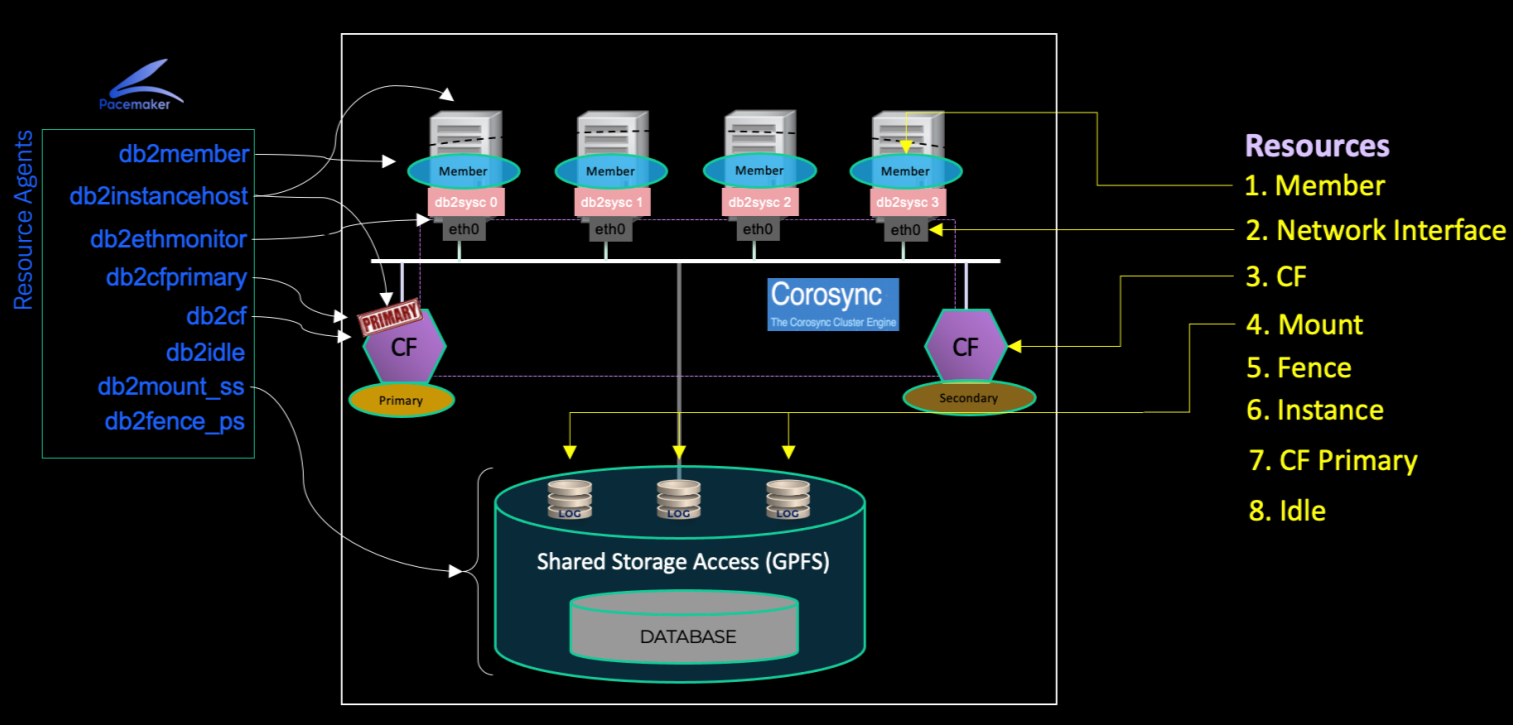

Now that I have gone through all the general aspects of a resource model, it’s time to get specific with pureScale. The diagram above highlights all the resources in a pureScale resource model with Pacemaker and where they belong within the pureScale configuration. Each resource will be explained in the next section.

Resources EXPLAINED!

At a quick glance at the above diagram, the pureScale resource model looks complicated, with lots of resources to keep track of. Once understood, however, the resource model is much simpler than it appears, with each resource handling one aspect of the pureScale configuration. Each resource and its respective resource agent are described in detail below:

- CF – A CF resource represents a cluster caching facility (CF) in a Db2 pureScale instance. To ensure the availability of this critical component, pureScale supports 2 CF resources in the cluster, with one designated as the primary and the other as the secondary. Note that the CF resource is defined as a fixed resource and can only be active on its designated CF host. The resource agent script for the CF resource is called db2cf.

- CF primary – The CF primary resource represents the current primary CF role within the Db2 pureScale instance. Unlike a CF resource, which is fixed to its host, the CF primary resource represents a logical role, so it is allowed to fail over to the other CF host, transferring the primary role from one CF to the other. The resource agent script for the CF primary resource is called db2cfprimary.

- Member – A member resource is created for each pureScale member in the Db2 pureScale instance. This resource will be used to control the Db2 member on either the home host (i.e. preferred host where the member should run) or a guest host (when animating an idle member to become a regular Db2 member). Later in the blog, we will go over how a member’s home host is specified. The resource agent script for the member resource is called db2member.

- Idle – The idle resource represents a pureScale idle member. There are 3 idle member resources on each member host, using the well-known member IDs 997, 998, and 999. These resources assist in member crash recovery. For example, when the home host for a Db2 member is unavailable, the Db2 member can temporarily take over an idle member on another member host until it can fail back to its home host. The resource agent script for the idle resource is called db2idle.

- Fence – The fence resource is new with Pacemaker (with TSA, this function was bundled within other resources). When a host unexpectedly leaves the cluster, or a STOP action has failed for a resource, the fence resource blocks the failed host from accessing the cluster's shared resources, preventing data corruption. Once the failed host is online and healthy, the fence resource allows the host to rejoin the cluster and access the shared resources. The resource agent script for the fence resource is called db2fence_ps.

All the resources below are a special type of resource called a clone. A clone is a resource that can be active on multiple hosts concurrently. For example, the shared file system needs to be active on all hosts at the same time, whereas a CF can only be active on a single host.

- Instance – The instance resource helps with planned maintenance, when all Db2 resources on a host must be kept in the stopped state and must not be (inadvertently) restarted while the software on the host is being updated. It monitors the instance status on each host within the cluster. The resource agent script for the instance resource is called db2instancehost.

- Network interface - Network monitoring for both public and private networks is done through the network interface resource. Db2 automatically creates these Ethernet monitoring resources for all network interfaces used by Db2. The resource agent script for the network interface resource is called db2ethmonitor.

- Mount - The mount resource is used to monitor each host’s access to the instance shared file system and each member’s access to the databases. The resource agent script for the mount resource is called db2mount_ss.
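Taken together, the two lists map onto resource names that follow a regular pattern in the sample db2cm output that follows. The sketch below rebuilds the expected resource list for a cluster from a handful of inputs. The naming scheme is inferred from that single example (instance db2inst1, CFs 128 and 129, members 0 and 1, interface eth1, file system db2fs_sharedFS); treat it as an illustrative assumption rather than a documented naming contract, and note that `expected_resources` is a hypothetical helper.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Resource:
    name: str    # Pacemaker resource name
    agent: str   # resource agent script named in this post
    clone: bool  # True if the resource runs as a clone on every host


def expected_resources(instance: str, cf_ids: List[int], member_ids: List[int],
                       member_hosts: List[str], interfaces: List[str],
                       mount_fs: str) -> List[Resource]:
    """Build resource names following the pattern seen in the sample output.

    The naming scheme is inferred from a single example cluster; it is an
    assumption for illustration, not a documented contract.
    """
    res = [Resource(f"db2_cf_{instance}_{cf}", "db2cf", False) for cf in cf_ids]
    res += [Resource(f"db2_member_{instance}_{m}", "db2member", False)
            for m in member_ids]
    res.append(Resource(f"db2_cfprimary_{instance}", "db2cfprimary", False))
    # Three idle members (IDs 999, 998, 997) on every member host.
    res += [Resource(f"db2_idle_{instance}_{i}_{host}", "db2idle", False)
            for host in member_hosts for i in (999, 998, 997)]
    # Clones: one resource definition that is active on all hosts.
    res += [Resource(f"db2_ethmonitor_{instance}_{nic}-clone", "db2ethmonitor", True)
            for nic in interfaces]
    res.append(Resource(f"db2_instancehost_{instance}-clone", "db2instancehost", True))
    res.append(Resource(f"db2_mount_{mount_fs}-clone", "db2mount_ss", True))
    res.append(Resource("db2_fence", "db2fence_ps", False))
    return res


if __name__ == "__main__":
    for r in expected_resources("db2inst1", [128, 129], [0, 1],
                                ["ps-srv-3", "ps-srv-4"], ["eth1"], "db2fs_sharedFS"):
        print(f"{r.name:40} {r.agent:16} {'clone' if r.clone else ''}")
```

For the example cluster this yields 15 entries, 3 of which are clones, which is a quick way to sanity-check that nothing is missing from a status listing.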

Now that you know all the resources that make up the pureScale resource model, it’s time to view the resource model in its entirety! For a detailed view of the pureScale resource model, the command db2cm -status can be used to monitor the state of all hosts and resources (for more information about the Pacemaker-specific db2cm command, you can refer to the documentation page The Db2 cluster manager (db2cm) utility). A sample resource model for an instance named db2inst1 on hosts ps-srv-1, ps-srv-2, ps-srv-3, and ps-srv-4 is shown below:

    Node List:
      * Online: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]

    Full List of Resources:
      * db2_cf_db2inst1_128 (ocf:db2:db2cf): Started ps-srv-1
      * db2_cf_db2inst1_129 (ocf:db2:db2cf): Started ps-srv-2
      * db2_member_db2inst1_0 (ocf:db2:db2member): Started ps-srv-3
      * db2_member_db2inst1_1 (ocf:db2:db2member): Started ps-srv-4
      * db2_cfprimary_db2inst1 (ocf:db2:db2cfprimary): Started ps-srv-1
      * db2_idle_db2inst1_999_ps-srv-3 (ocf:db2:db2idle): Started ps-srv-3
      * db2_idle_db2inst1_998_ps-srv-3 (ocf:db2:db2idle): Started ps-srv-3
      * db2_idle_db2inst1_997_ps-srv-3 (ocf:db2:db2idle): Started ps-srv-3
      * db2_idle_db2inst1_999_ps-srv-4 (ocf:db2:db2idle): Started ps-srv-4
      * db2_idle_db2inst1_998_ps-srv-4 (ocf:db2:db2idle): Started ps-srv-4
      * db2_idle_db2inst1_997_ps-srv-4 (ocf:db2:db2idle): Started ps-srv-4
      * Clone Set: db2_ethmonitor_db2inst1_eth1-clone [db2_ethmonitor_db2inst1_eth1]:
        * Started: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]
      * Clone Set: db2_instancehost_db2inst1-clone [db2_instancehost_db2inst1]:
        * Started: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]
      * Clone Set: db2_mount_db2fs_sharedFS-clone [db2_mount_db2fs_sharedFS]:
        * Started: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]
      * db2_fence (stonith:db2fence_ps): Started ps-srv-1
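For scripting, output in this shape can be turned into a resource-to-host map. The sketch below assumes the exact line layout of the sample above (one `* name (class:provider:type): State host` entry per line, and clone sets followed by a `* Started: [ ... ]` line). The regular expressions are assumptions based on that sample, not a documented output format, so verify them against your own release before relying on them.

```python
import re
from typing import Dict, List, Tuple

# Patterns assumed from the sample output in this post; adjust if your
# release formats the output differently.
PRIMITIVE = re.compile(r"^\s*\*\s+(\S+)\s+\((\S+)\):\s+(\w+)(?:\s+(\S+))?\s*$")
CLONE = re.compile(r"^\s*\*\s+Clone Set:\s+(\S+)\s+\[(\S+)\]:\s*$")
CLONE_HOSTS = re.compile(r"^\s*\*\s+(\w+):\s+\[\s*(.*?)\s*\]\s*$")

SAMPLE = """\
Node List:
  * Online: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]

Full List of Resources:
  * db2_cf_db2inst1_128 (ocf:db2:db2cf): Started ps-srv-1
  * db2_cfprimary_db2inst1 (ocf:db2:db2cfprimary): Started ps-srv-1
  * Clone Set: db2_mount_db2fs_sharedFS-clone [db2_mount_db2fs_sharedFS]:
    * Started: [ ps-srv-1 ps-srv-2 ps-srv-3 ps-srv-4 ]
  * db2_fence (stonith:db2fence_ps): Started ps-srv-1
"""


def parse_status(text: str) -> Dict[str, Tuple[str, List[str]]]:
    """Map each resource (or clone set) name to (state, hosts).

    Simplification: a clone with several state lines (for example Started
    and Stopped) keeps only the last one.
    """
    result: Dict[str, Tuple[str, List[str]]] = {}
    in_resources = False
    current_clone = None
    for line in text.splitlines():
        if line.strip().startswith("Full List of Resources"):
            in_resources = True  # skip the node list above this header
            continue
        if not in_resources:
            continue
        m = CLONE.match(line)
        if m:
            current_clone = m.group(1)  # its hosts follow on the next line
            continue
        m = PRIMITIVE.match(line)
        if m:
            hosts = [m.group(4)] if m.group(4) else []  # "Stopped" has no host
            result[m.group(1)] = (m.group(3), hosts)
            current_clone = None
            continue
        m = CLONE_HOSTS.match(line)
        if m and current_clone:
            result[current_clone] = (m.group(1), m.group(2).split())
    return result
```

For example, `parse_status(SAMPLE)["db2_cfprimary_db2inst1"]` gives `('Started', ['ps-srv-1'])`, which tells a script which host currently holds the primary CF role.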

Just as a reminder, most day-to-day operations do not require the detailed output from the previous command. For high-level monitoring, you can view CF and member statuses and alerts using db2instance -list and db2cm -list -alert. For more information about pureScale monitoring, refer to the documentation Interfaces for retrieving status information for Db2 pureScale instances.

Old vs. New

You might be wondering how the Pacemaker resource model differs from the current TSA one. Rest assured that the existing pureScale functionality is maintained with Pacemaker. A few differences do exist, however; the most visible, though purely cosmetic, is the naming of the resources. The table below shows how the TSA resource names correspond to the new Pacemaker resource names.

| Resource | Example TSA Resource Name | Example Pacemaker Resource Name |
| --- | --- | --- |
| CF | ca_db2inst1_0-rg | db2_cf_db2inst1_128 |
| Member | db2_db2inst1_0-rg | db2_member_db2inst1_0 |
| Idle | idle_db2inst1_999_ps-srv-3-rg | db2_idle_db2inst1_999_ps-srv-3 |
| CF Primary | primary_db2inst1_900-rg | db2_cfprimary_db2inst1 |
| GPFS Mount | db2mnt-db2fs_sharedFS-rg | db2_mount_db2fs_sharedFS-clone |
| Network Interface | db2_public_network_db2inst1_0 | db2_ethmonitor_db2inst1_eth1-clone |
| Instance | instancehost_regress1-equ | db2_instancehost_db2inst1-clone |
| Fence | Does not exist in TSA | db2_fence |
| CF Control | cacontrol_regress1_equ | No longer required for Pacemaker |

Further differences between the TSA and Pacemaker resource models, such as quorum policies, will be discussed in subsequent blogs. If you are eager to get acquainted with the new quorum policies, see the IBM documentation page Quorum policies supported on Pacemaker.

How does Pacemaker know how to keep the cluster healthy?

Pacemaker decides where resources run and how to handle resource failures by referring to defined constraints between resources. There are three types of Pacemaker constraints that Db2 utilizes to build our pureScale resource model.

- Location preference constraint – defines the host on which the resource prefers to run.

- Location rule constraint – restricts where a resource is allowed to run. Db2 commonly uses this constraint to prevent a resource from running on a host, and these constraints are often conditional (for example, on the state of other resources).

- Ordering constraint – defines a dependency of one resource on another. In other words, this constraint defines the order in which starting or stopping actions between 2 resources should occur.
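Location constraints work by attaching scores to hosts, and Pacemaker adds up every score that applies to a resource on a given host. Pacemaker represents INFINITY as 1,000,000 and documents special rules for adding infinite scores: anything plus -INFINITY is -INFINITY (even INFINITY), and any other value plus INFINITY is INFINITY. The minimal sketch below reproduces those rules and picks the best host. It is an illustration written for this post, not Pacemaker code; `pick_host` is a hypothetical helper, and it only treats a total of -INFINITY as a ban, which is enough for the examples that follow.

```python
from typing import Dict, List, Optional

INFINITY = 1_000_000  # Pacemaker's numeric value for INFINITY


def add_scores(a: int, b: int) -> int:
    """Add two scores following Pacemaker's documented infinity rules."""
    if a <= -INFINITY or b <= -INFINITY:
        return -INFINITY  # -INFINITY wins, even against +INFINITY
    if a >= INFINITY or b >= INFINITY:
        return INFINITY
    return max(-INFINITY, min(INFINITY, a + b))  # keep finite sums in range


def pick_host(scores_per_host: Dict[str, List[int]]) -> Optional[str]:
    """Return the host with the highest total score, or None if all are banned.

    Simplification: only a total of -INFINITY bans a host here.
    """
    totals = {}
    for host, scores in scores_per_host.items():
        total = 0
        for s in scores:
            total = add_scores(total, s)
        totals[host] = total
    allowed = {h: t for h, t in totals.items() if t > -INFINITY}
    if not allowed:
        return None
    return max(allowed, key=lambda h: allowed[h])
```

With `{"ps-srv-3": [INFINITY], "ps-srv-4": [100]}` the sketch returns `"ps-srv-3"`; adding a `-INFINITY` score to ps-srv-3 (as a matching rule would) moves the choice to `"ps-srv-4"`.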

The following examples show how each of these constraint types is used within the pureScale resource model. They are not a complete list of the constraints in the resource model; we will continue to discuss pureScale resource model constraints in subsequent blogs to give you a more complete picture. To view the complete list of resources and constraints in the XML format used in the examples below, run the command cibadmin --query -o configuration.
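Because that output is plain XML, it can be inspected with Python's standard xml.etree.ElementTree module. The sketch below counts constraints by type and lists the constraints that mention a given resource. It assumes you have saved the output of `cibadmin --query -o configuration` (for example, redirected to a file) and read it into a string; the embedded `SAMPLE` is a trimmed, hypothetical excerpt assembled from the examples in this post, not a complete configuration, and both helper functions are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from typing import List

# Trimmed, hypothetical excerpt of a <configuration> section.
SAMPLE = """<configuration>
  <resources/>
  <constraints>
    <rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-3"
      rsc="db2_member_db2inst1_0" resource-discovery="exclusive"
      score="INFINITY" node="ps-srv-3"/>
    <rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-4"
      rsc="db2_member_db2inst1_0" resource-discovery="exclusive"
      score="100" node="ps-srv-4"/>
    <rsc_order id="order-db2_mount_db2fs_sharedFS-clone-then-db2_member_db2inst1_0"
      kind="Mandatory" symmetrical="true"
      first="db2_mount_db2fs_sharedFS-clone" first-action="start"
      then="db2_member_db2inst1_0" then-action="start"/>
  </constraints>
</configuration>"""


def constraint_summary(config_xml: str) -> Counter:
    """Count constraints in a <configuration> section by element type."""
    constraints = ET.fromstring(config_xml).find("constraints")
    if constraints is None:
        return Counter()
    return Counter(child.tag for child in constraints)


def constraints_for(config_xml: str, resource: str) -> List[str]:
    """Return the ids of constraints that reference the given resource."""
    ids = []
    for elem in ET.fromstring(config_xml).iter():
        # Location constraints use rsc; ordering constraints use first/then.
        if resource in (elem.get("rsc"), elem.get("first"), elem.get("then")):
            ids.append(elem.get("id"))
    return ids
```

On a live cluster you would pass the real XML instead of `SAMPLE`; listing every constraint that touches a member resource is a quick way to see why Pacemaker placed or ordered it the way it did.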

Db2 Member resource with Location Preference Constraint

As previously mentioned, each Db2 member has a preferred host to run on, referred to as its “home host”. Location preference constraints implement this by telling Pacemaker the following: Db2 member 0 (resource name db2_member_db2inst1_0) can run on either ps-srv-3 or ps-srv-4, but will run on ps-srv-3 whenever that host is active, as it is the member's designated home host.

<rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-3" rsc="db2_member_db2inst1_0" resource-discovery="exclusive" score="INFINITY" node="ps-srv-3"/>

<rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-4" rsc="db2_member_db2inst1_0" resource-discovery="exclusive" score="100" node="ps-srv-4"/>

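A higher score represents a higher location priority (INFINITY is greater than 100). In addition, resource-discovery="exclusive" restricts resource discovery (the probe Pacemaker runs to check whether a resource is already active on a host) to only the hosts named in these constraints. For Db2, we use resource-discovery="exclusive" because a Db2 member resource can only run on the Db2 member hosts, so there is no need to probe for it anywhere else.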
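To make the home-host behavior concrete, the sketch below reads the two rsc_location elements from the example, ignores hosts that are not online, and picks the highest-scoring remaining host. It is a deliberately simplified model written for this post: Pacemaker's real placement logic weighs many more factors (other constraints, stickiness, and so on), and `choose_host` and `parse_score` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET
from typing import Optional, Set

INFINITY = 1_000_000  # Pacemaker's numeric value for INFINITY

PREFS = """<constraints>
  <rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-3" rsc="db2_member_db2inst1_0"
    resource-discovery="exclusive" score="INFINITY" node="ps-srv-3"/>
  <rsc_location id="pref-db2_member_db2inst1_0-runsOn-ps-srv-4" rsc="db2_member_db2inst1_0"
    resource-discovery="exclusive" score="100" node="ps-srv-4"/>
</constraints>"""


def parse_score(text: str) -> int:
    """Convert a score string such as 'INFINITY', '-INFINITY' or '100'."""
    text = text.strip()
    if text in ("INFINITY", "+INFINITY"):
        return INFINITY
    if text == "-INFINITY":
        return -INFINITY
    return int(text)


def choose_host(xml_text: str, rsc: str, online: Set[str]) -> Optional[str]:
    """Pick the online host with the highest location preference for rsc."""
    candidates = {}
    for loc in ET.fromstring(xml_text).iter("rsc_location"):
        if loc.get("rsc") == rsc and loc.get("node") in online:
            candidates[loc.get("node")] = parse_score(loc.get("score"))
    if not candidates:
        return None  # in this sketch: none of the listed hosts is online
    return max(candidates, key=lambda h: candidates[h])
```

With all four hosts online, member 0 lands on its home host ps-srv-3; take ps-srv-3 out of the `online` set and the sketch falls back to ps-srv-4, mirroring the failover behavior described above.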

Db2 Primary CF resource with Location Rule Constraint

In the case of the Db2 primary CF resource, since it is a logical role associated with a running CF process, we cannot simply assign it to a preferred host with a location preference constraint like in the example above. Instead, we use a location rule constraint to restrict where the Db2 primary CF resource can run based on the CF processes. The constraint below specifies that the primary CF resource cannot run on a host where there is no active CF (that is, where neither the db2_cf_db2inst1_128 nor the db2_cf_db2inst1_129 resource is running).

<rsc_location id="dep-db2_cfprimary_db2inst1-dependsOn-cf" rsc="db2_cfprimary_db2inst1">

<rule score="-INFINITY" id="dep-db2_cfprimary_db2inst1-dependsOn-cf-rule">

<expression attribute="db2_cf_db2inst1_128_cib" operation="ne" value="1" id="dep-db2_cfprimary_db2inst1-dependsOn-cf-rule-expression"/>

<expression attribute="db2_cf_db2inst1_129_cib" operation="ne" value="1" id="dep-db2_cfprimary_db2inst1-dependsOn-cf-rule-expression-0"/>

</rule>

</rsc_location>

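Within the constraint, each expression attribute represents the state of a CF resource on the host, where 1 is started and 0 is stopped. Because the rule does not set a boolean-op, Pacemaker combines the two expressions with a logical AND (the default), so the rule matches only when neither CF is started on that host. The -INFINITY score, commonly used in Db2 rules, means that the resource must never run on a host where the rule matches.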
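The rule can be evaluated by hand for each host. The sketch below models each host's attributes as a plain dictionary; the attribute values in `NODE_ATTRS` are hypothetical, chosen to match the sample cluster where the CFs run on ps-srv-1 and ps-srv-2. It applies the two `ne` expressions joined by AND and returns the score the rule contributes. Treating an undefined attribute as "not equal" is an assumption of this sketch, and only the `eq` and `ne` operations are modeled.

```python
from typing import Dict, Optional

INFINITY = 1_000_000

# The two expressions from the rule above: (attribute, operation, value).
EXPRESSIONS = [
    ("db2_cf_db2inst1_128_cib", "ne", "1"),
    ("db2_cf_db2inst1_129_cib", "ne", "1"),
]

# Hypothetical per-host attribute values for the sample cluster.
NODE_ATTRS = {
    "ps-srv-1": {"db2_cf_db2inst1_128_cib": "1", "db2_cf_db2inst1_129_cib": "0"},
    "ps-srv-2": {"db2_cf_db2inst1_128_cib": "0", "db2_cf_db2inst1_129_cib": "1"},
    "ps-srv-3": {"db2_cf_db2inst1_128_cib": "0", "db2_cf_db2inst1_129_cib": "0"},
}


def expression_matches(attrs: Dict[str, str], attribute: str, op: str, value: str) -> bool:
    """Evaluate one expression; an undefined attribute counts as 'not equal' here."""
    actual: Optional[str] = attrs.get(attribute)
    if op == "eq":
        return actual == value
    if op == "ne":
        return actual != value
    raise ValueError(f"operation {op!r} is not modelled in this sketch")


def cfprimary_score(attrs: Dict[str, str]) -> int:
    """Return -INFINITY if the rule matches (no CF started on the host), else 0."""
    if all(expression_matches(attrs, *expr) for expr in EXPRESSIONS):
        return -INFINITY
    return 0


if __name__ == "__main__":
    for host, attrs in NODE_ATTRS.items():
        print(host, cfprimary_score(attrs))
```

Only the hosts running a CF escape the -INFINITY ban, so the primary role can move between ps-srv-1 and ps-srv-2 but never to a member host such as ps-srv-3.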

Db2 Member resource and shared GPFS mount resource with Ordering Constraint

The shared file system must be available on all hosts before any resource can run. For example, the following ordering constraints ensure that the shared GPFS mount resource is started before all Db2 member resources (i.e., both db2_member_db2inst1_0 and db2_member_db2inst1_1).

<rsc_order id="order-db2_mount_db2fs_sharedFS-clone-then-db2_member_db2inst1_0" kind="Mandatory" symmetrical="true" first="db2_mount_db2fs_sharedFS-clone" first-action="start" then="db2_member_db2inst1_0" then-action="start"/>

<rsc_order id="order-db2_mount_db2fs_sharedFS-clone-then-db2_member_db2inst1_1" kind="Mandatory" symmetrical="true" first="db2_mount_db2fs_sharedFS-clone" first-action="start" then="db2_member_db2inst1_1" then-action="start"/>

Note that kind="Mandatory" indicates that this is a required condition: member resources can only run on a host if the shared GPFS mount resource is active. If, for any reason, the shared GPFS mount resource goes offline, the member resources will also be stopped. In addition, symmetrical="true" means that the reverse order applies when stopping, so the member resources are stopped before the shared GPFS mount resource.
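The effect of these two ordering constraints can be sketched as a topological sort, using Python's standard graphlib module (Python 3.9 or later). The sketch models only the mount-before-member relationship described above, using the db2inst1 member names from the text, and derives the stop sequence by reversing the start sequence, as symmetrical="true" implies. It shows one valid order; Pacemaker itself may start the two independent members in parallel, and the helper names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

# (first, then) pairs: the mount clone must start before each member.
ORDERS: List[Tuple[str, str]] = [
    ("db2_mount_db2fs_sharedFS-clone", "db2_member_db2inst1_0"),
    ("db2_mount_db2fs_sharedFS-clone", "db2_member_db2inst1_1"),
]


def start_sequence(orders: List[Tuple[str, str]]) -> List[str]:
    """Return a start order in which every 'first' precedes its 'then'."""
    graph: Dict[str, Set[str]] = {}
    for first, then in orders:
        graph.setdefault(then, set()).add(first)  # 'then' depends on 'first'
        graph.setdefault(first, set())
    return list(TopologicalSorter(graph).static_order())


def stop_sequence(orders: List[Tuple[str, str]]) -> List[str]:
    """symmetrical="true": stop in the reverse of the start order."""
    return list(reversed(start_sequence(orders)))


if __name__ == "__main__":
    print("start:", start_sequence(ORDERS))
    print("stop: ", stop_sequence(ORDERS))
```

The mount clone always comes first when starting and last when stopping, which is exactly the guarantee that keeps a member from running on a host without the shared file system.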

Transitioning from TSA to Pacemaker

This brings us to the end of the high-level information you need to know about the pureScale resource model! The next step is making the transition from TSA to Pacemaker. If you already have a pureScale cluster deployed with TSA, follow the procedures in the IBM documentation for Upgrading a Db2 pureScale server to upgrade to Db2 version 12.1.0 or later. Alternatively, you can deploy a new Db2 pureScale instance in AWS from the AWS Marketplace. Stay tuned for the next blog post in this series, and please reach out if you have any questions or concerns about this blog’s content.

Justina Srebrnjak started at IBM in 2021 as a software developer intern. In 2023, she graduated with a BEng in Software Engineering from McMaster University and transitioned to a full-time software developer position on the Db2 pureScale and High Availability team. During her time at IBM, she has focused on integrating Pacemaker with pureScale for Db2 version 12.1.0 and continues to work on improving high availability features for future Db2 versions. Justina can be reached at j.srebrnjak@ibm.com.